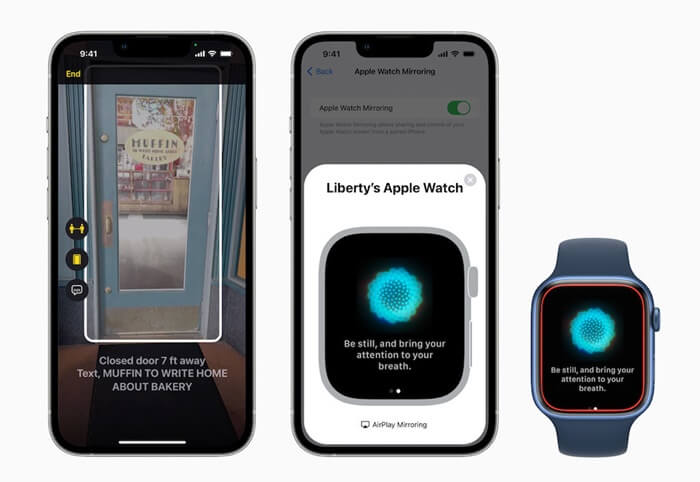

Some of these include live captions for calls, videos, and more that work even when the device is offline; door detection, which spots a nearby door and reads the text on it; and the ability to view a paired Apple Watch's screen on an iPhone. All of these are coming soon, most likely with iOS 16.

Apple’s New Accessibility Features

Apple has long been praised for supporting users with disabilities through a wide range of accessibility features. The new features below add to that lineup and should make Apple devices easier to use:

Apple Watch Mirroring

Building on AirPlay, Apple's technology for streaming content from one device to another, Apple is bringing a feature to the Apple Watch that lets anyone with a Series 6 or newer model see their Watch screen on a paired iPhone.

This is especially helpful because Apple Watch screens, like those of most smartwatches, are small.

Door Detection

On LiDAR-equipped iPhones and iPads, Apple users will soon be able to tell how far away a door is, whether it is open or closed, and what text is written on it. This should especially benefit users with low vision, since it makes getting around easier.

Offline Live Captions

Apple is bringing Live Captions to iPhone, iPad, and Mac, and they work offline, so users with a poor internet connection or none at all can still follow a transcription of whatever audio is playing. Captions work across calls, videos, and most apps.

The feature can even attribute captions to the right speaker in Group FaceTime calls. On a Mac, users can also type a response during a call and have it read aloud. Live Captions will be available only on iPhone 11 or newer, iPads with the A12 Bionic chip or later, and Macs with an M1 chip, likely because transcribing audio on the device itself requires the processing power of Apple's own chips.

Apple didn't say exactly when these features will roll out, but expect them to arrive with iOS 16.